|

Newly developed diffusion-based techniques have showcased

phenomenal abilities in producing a wide range of high-quality images,

sparking considerable interest in various applications. A prevalent scenario

is to generate new images based on a subject from reference images.

This subject could be face identity for styled avatars, body and clothing

for virtual try-on and so on. Satisfying this requirement is evolving

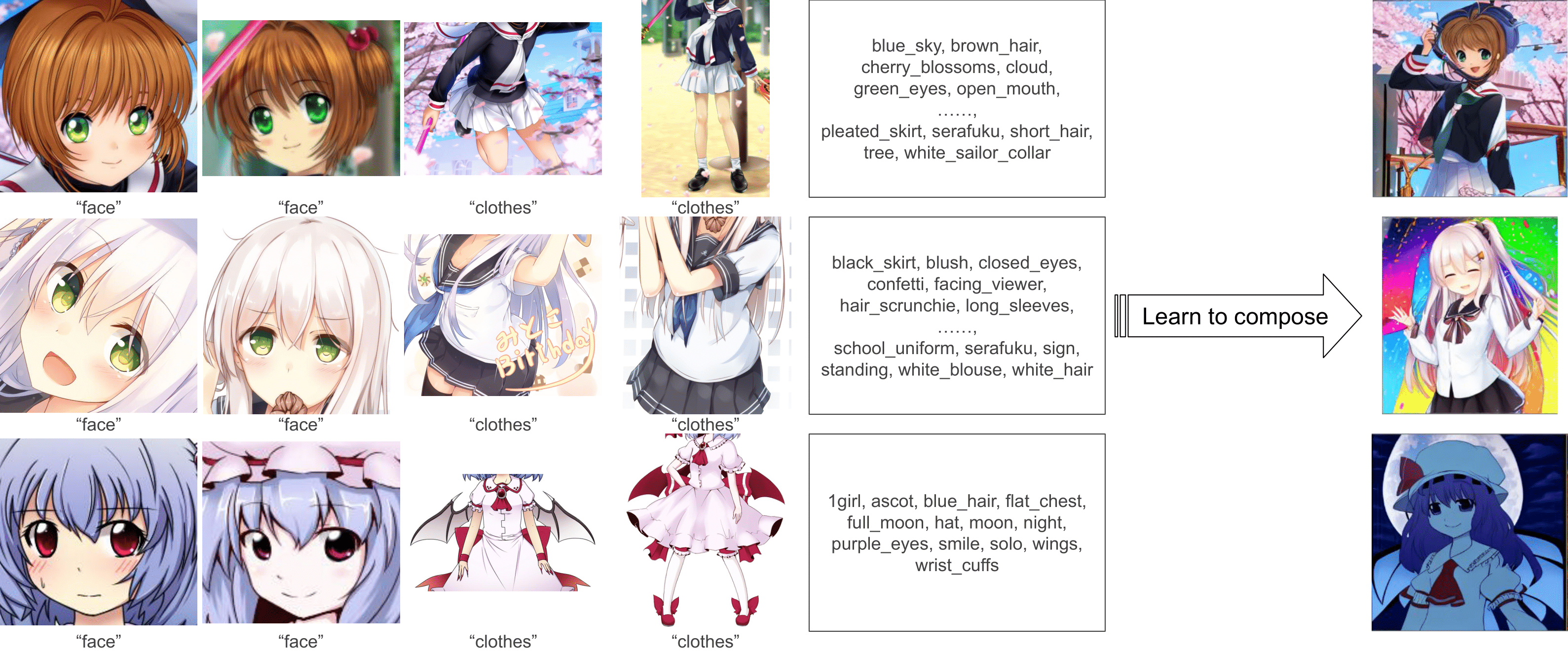

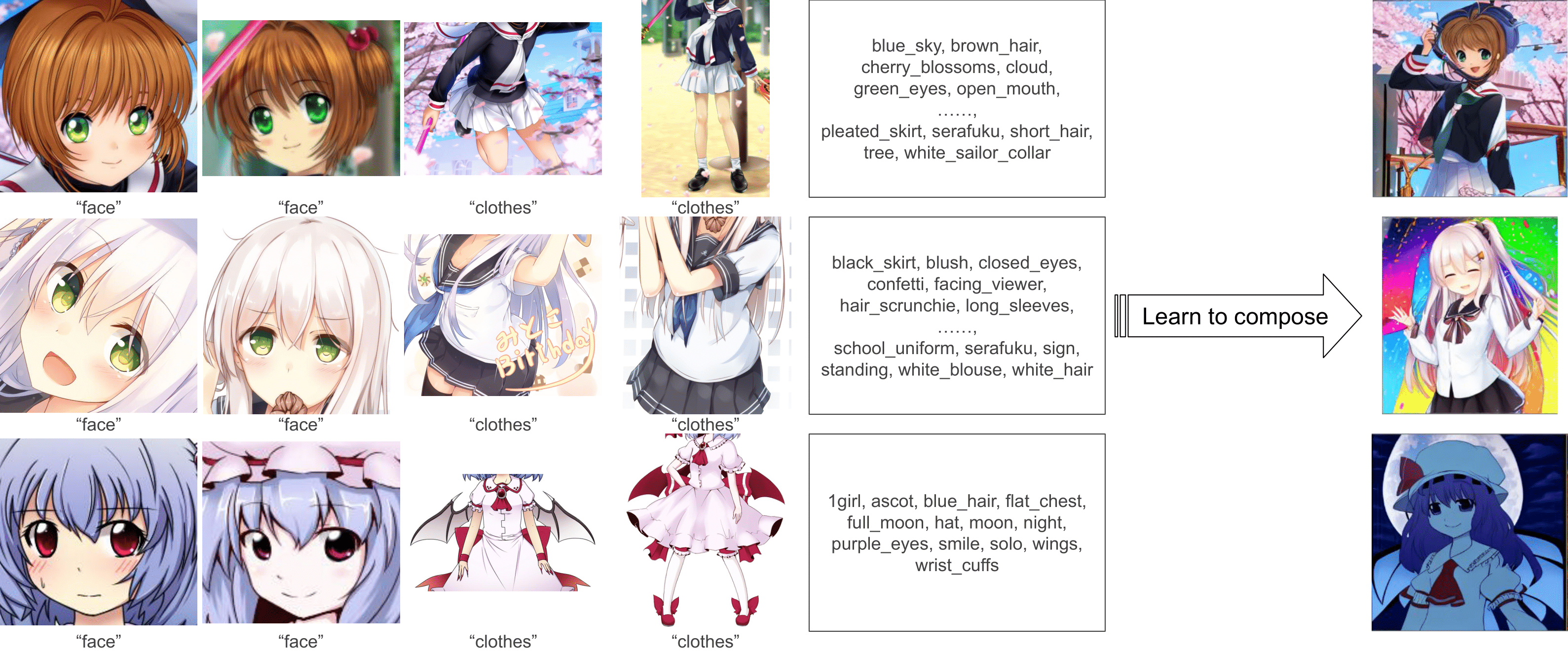

into a field called Subject-Driven Generation. In this paper, we consider

Subject-Driven Generation as a unified retrieval problem with diffusion

models. We introduce a novel diffusion model architecture, named RetriNet,

designed to address and solve these problems by retrieving subject

attributes from reference images precisely, and filter out irrelevant information.

RetriNet demonstrates impressive performance when compared

to existing state-of-the-art approaches in face generation. We further

propose a research and iteration friendly dataset, RetriBooru, to study a

more difficult problem, concept composition. Finally, to better evaluate

alignment between similarity and diversity or measure diversity that have

been previously unaccounted for, we introduce a novel class of metrics

named Similarity Weighted Diversity (SWD).

|